By Victor Bolu @June, 18 2025

Surveys and questionnaires are traditional methods of data collection that involve gathering information through structured question sets. They allow organizations to directly obtain data from individuals and can be tailored to specific research objectives. Advantages include the ability to collect targeted data, cost-effectiveness, and flexibility in survey design. However, drawbacks may include response bias, limited sample sizes, and potential for incomplete or inaccurate responses.

Interviews involve direct communication with individuals or groups to gather qualitative and in-depth data. They can provide valuable insights, capture nuances, and offer a deeper understanding of complex topics. Interviews allow for open-ended questioning and follow-up inquiries. The advantages include rich and detailed data, the ability to clarify responses, and the potential for building rapport. However, interviews can be time-consuming, resource-intensive, and subject to interviewer bias.

Observational studies involve directly observing and recording behaviors, interactions, or events. They can be conducted in controlled environments or real-world settings. Observational data provides firsthand information and can capture natural behaviors. It is useful for studying human interactions, patterns, and environmental factors. Advantages include capturing real-time data, reducing response bias, and providing contextual insights. Challenges may include ethical considerations, potential observer bias, and the limited ability to control variables.

Social media mining involves extracting data from various social media platforms. It allows organizations to collect large-scale, real-time data on public opinions, trends, and user behaviors. Advantages include access to vast amounts of publicly available data, insights into customer sentiment, and the ability to track social trends. However, challenges include data noise, privacy concerns, and the need for sophisticated tools to analyze unstructured data.

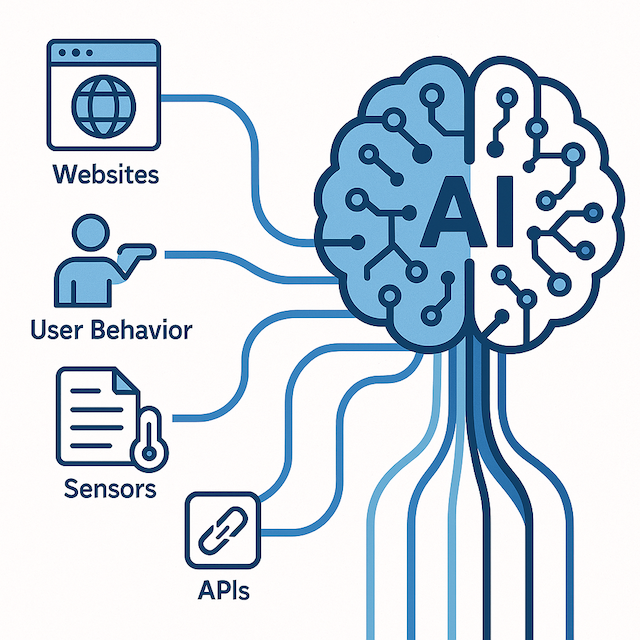

Sensor data collection involves gathering data from physical sensors, such as those found in IoT devices, wearables, or environmental monitoring systems. Sensor data provides real-time information on various parameters, such as temperature, humidity, location, or movement. It enables organizations to collect objective and continuous data. Advantages include accurate and precise measurements, automation possibilities, and monitoring of dynamic processes. Challenges include data integration, scalability, and ensuring sensor accuracy and reliability.

Web scraping entails extracting data from websites using automated tools or scripts. It enables organizations to collect diverse and real-time information from online sources. Web scraping is advantageous for gathering large-scale datasets, tracking competitors, and monitoring market trends. However, ethical considerations, legal constraints, and the need for robust scraping techniques are important factors to consider.

Existing databases and archives, such as government records, academic repositories, or industry-specific data sources, can be valuable resources for AI and ML. These databases offer pre-existing and curated datasets that are relevant to specific research or application domains. Advantages include data reliability, availability of historical data, and reduced data collection efforts. Limitations may include limited access, data quality concerns, and potential biases in existing datasets.